Application of federated XGBoost in outlier detection

Federated learning is evolving rapidly, and combining it with other machine learning methods has become a popular approach to privacy-preserving modeling. XGBoost is one of the most powerful and widely used machine learning algorithms. In June 2019, the paper “Secureboost: A lossless federated learning framework” proposed the SecureBoost framework, which applied boosting, an ensemble learning method, to vertical federated learning. A more recent paper, “The Tradeoff Between Privacy and Accuracy in Anomaly Detection Using Federated XGBoost”, combines XGBoost with horizontal federated learning, applies it to credit card transaction anomaly detection, and obtains good detection results. It also identifies the privacy protection setting that gives the best training results.

Introduction

Today, many large Internet companies have built large-scale information technology infrastructure to provide customers with multiple services. However, transmitting large amounts of data causes two problems: data exchanged between different enterprises is likely to leak private information, and the transmission itself greatly increases communication costs. Federated learning emerged in this context. Instead of transmitting raw data, each client transmits a locally trained model to the server, which protects user privacy.

As federated learning has spread, combining it with other machine learning methods, such as logistic regression and tree models, has become a popular approach. In June 2019, the paper “Secureboost: A lossless federated learning framework” applied boosting, an ensemble learning method, to vertical federated learning. The paper discussed here extends that line of work: it combines horizontal federated learning with the XGBoost ensemble method and applies the result to detecting abnormal bank credit card transactions.

Executing the XGBoost algorithm in a federated learning framework means computing, on each local data node, the parameters used to update the overall model (in XGBoost, the first- and second-order gradient statistics g_i and h_i of each sample), and then transferring these parameters to a central server. The central server updates the overall model, selects an optimal split, and passes the updated model back to each local node. This method is effective in vertical federated learning scenarios. However, it does not carry over to the horizontal federated learning scenario studied in “The Tradeoff Between Privacy and Accuracy in Anomaly Detection Using Federated XGBoost”: there, each local data node has a different data distribution, and the training samples are extremely imbalanced, so the effect of the sample distribution on the results is more pronounced. If the parameters of each node are simply summed as described above, regardless of how the data are distributed across nodes, the resulting model may be unreasonable.
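The basic exchange described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: it assumes a binary logistic loss (for which g_i = p_i - y_i and h_i = p_i(1 - p_i)), and the function names `local_grad_stats`, `server_aggregate`, and `leaf_weight` are hypothetical. The leaf weight uses the standard XGBoost formula -G / (H + lambda).

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def local_grad_stats(labels, raw_scores):
    """Run on one data node: per-sample (g_i, h_i) for logistic loss."""
    stats = []
    for y, score in zip(labels, raw_scores):
        p = sigmoid(score)
        stats.append((p - y, p * (1.0 - p)))  # (gradient, hessian)
    return stats

def server_aggregate(per_node_stats):
    """Run on the server: sum g_i and h_i over all samples of all nodes."""
    G = sum(g for node in per_node_stats for g, _ in node)
    H = sum(h for node in per_node_stats for _, h in node)
    return G, H

def leaf_weight(G, H, reg_lambda=1.0):
    """Optimal leaf weight in XGBoost for summed statistics G and H."""
    return -G / (H + reg_lambda)

# Two nodes, all samples starting from a raw score of 0 (p = 0.5).
node_a = local_grad_stats([0, 0, 1], [0.0, 0.0, 0.0])
node_b = local_grad_stats([0, 1], [0.0, 0.0])
G, H = server_aggregate([node_a, node_b])
w = leaf_weight(G, H)
```

Only the gradient statistics leave each node; the raw features and labels stay local. Note that the server sums the statistics without regard to how samples are distributed across nodes, which is the weakness the paper addresses.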

Method

To solve these problems, the paper proposes transmitting user features in a transparent and efficient manner, mainly through the following two steps.

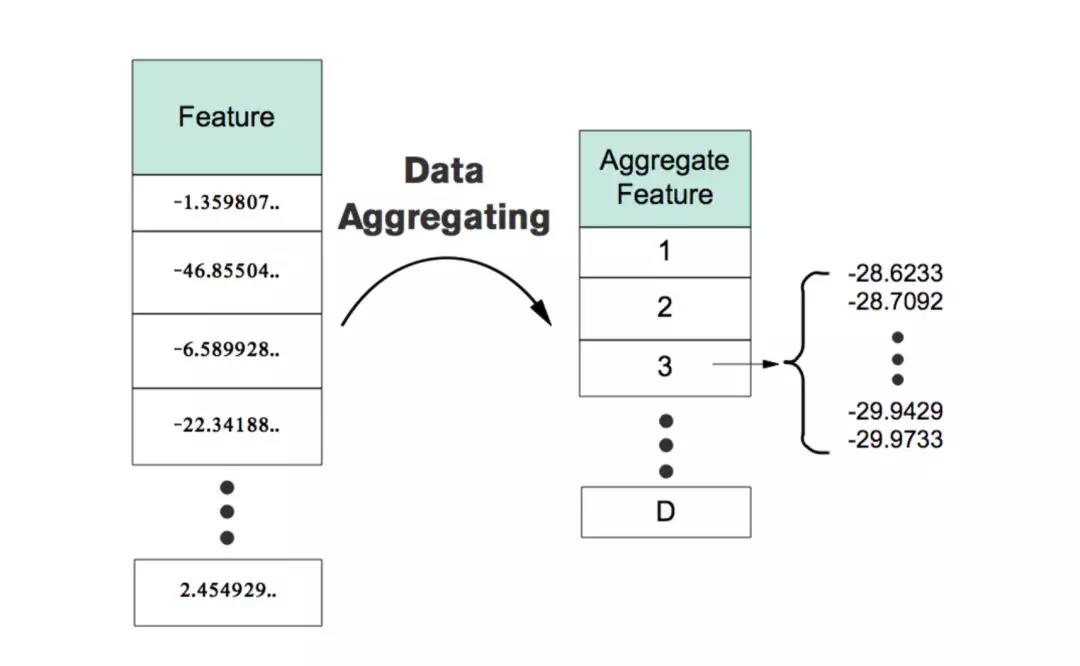

Data aggregation

The first step is to aggregate the raw data, mapping each batch of similar samples to one virtual data sample. Consider the first virtual sample: the g_i and h_i of every raw sample i that belongs to it are summed to obtain the update parameters of that virtual sample, and the other virtual samples are handled in the same way. The update parameters of each virtual sample are then transmitted to the central server, which updates the overall model and returns it. Note that the updated model is returned to each raw data node, not to a virtual data node. Because this process aggregates data, it may still leak information. To address this, the authors protect the data with a modified k-anonymity.
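The aggregation step described above can be sketched as follows. This is an illustration under stated assumptions: the mapping from raw samples to virtual samples (`cluster_ids`) is taken as given, since how similar samples are grouped is not detailed here, and the name `aggregate_to_virtual` is hypothetical.

```python
from collections import defaultdict

def aggregate_to_virtual(cluster_ids, grads, hessians):
    """Sum g_i and h_i over the raw samples mapped to each virtual sample.

    cluster_ids[i] is the (assumed, precomputed) virtual sample that raw
    sample i belongs to. Returns {virtual_id: (G, H)}.
    """
    sums = defaultdict(lambda: [0.0, 0.0])
    for cid, g, h in zip(cluster_ids, grads, hessians):
        sums[cid][0] += g
        sums[cid][1] += h
    return {cid: (G, H) for cid, (G, H) in sums.items()}

# Five raw samples grouped into two virtual samples.
cluster_ids = [0, 0, 1, 1, 1]
grads = [0.5, 0.5, -0.5, 0.5, -0.5]
hessians = [0.25, 0.25, 0.25, 0.25, 0.25]
virtual_stats = aggregate_to_virtual(cluster_ids, grads, hessians)
```

The node would send only `virtual_stats` to the server, so the server sees one pair of sums per virtual sample rather than per-sample statistics.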

K-anonymity publishes lower-precision data through generalization and suppression, so that each record shares the same identifying attribute values with at least k-1 other records in the table, which reduces the risk of privacy leakage. Here, because the local node transfers model parameters to the server, a modified k-anonymity is used: each node transmits model parameters to the central server instead of the original data.
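For readers unfamiliar with the idea, the classic form of k-anonymity can be checked with a short script. This sketch illustrates the standard definition only, not the paper's modified variant; the records, the attribute names, and the helpers `is_k_anonymous` and `generalize_age` are all made up for illustration.

```python
from collections import Counter

def is_k_anonymous(records, quasi_identifiers, k):
    """True if every combination of quasi-identifier values occurs >= k times."""
    groups = Counter(tuple(r[q] for q in quasi_identifiers) for r in records)
    return all(count >= k for count in groups.values())

def generalize_age(record, bucket=10):
    """Generalization: replace an exact age with a coarse age range."""
    low = (record["age"] // bucket) * bucket
    return {**record, "age": f"{low}-{low + bucket - 1}"}

# Hypothetical records; "age" and "zip" act as quasi-identifiers.
raw = [
    {"age": 23, "zip": "100**", "amount": 12.0},
    {"age": 27, "zip": "100**", "amount": 80.5},
    {"age": 31, "zip": "100**", "amount": 7.2},
    {"age": 38, "zip": "100**", "amount": 40.0},
]
generalized = [generalize_age(r) for r in raw]

raw_ok = is_k_anonymous(raw, ["age", "zip"], k=2)
generalized_ok = is_k_anonymous(generalized, ["age", "zip"], k=2)
```

With exact ages every record is unique, so the raw table is not 2-anonymous; after bucketing ages into decades, each record is indistinguishable from one other record on its quasi-identifiers.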

Sparse federated update

In practice, transmission is inefficient because the data volume is large, and not all data is worth sending for an update. The data therefore needs to be filtered before the model is updated. Although XGBoost performs well at outlier prediction, many samples are still misclassified. The paper offers a new perspective: training samples that were misclassified in earlier rounds should receive more attention in later rounds and should be the ones transmitted to the server for the federated update. There are two reasons. First, misclassified training samples are more valuable than the others, because they help the model correct itself. Second, although the data in anomaly detection is extremely imbalanced, the XGBoost algorithm can handle this skew to some extent. In addition, if the correctly classified training samples are not filtered out, they influence how the trees are split and built during the federated update, which hinders model improvement.
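The filtering idea described above can be sketched as follows. This is a simplified illustration, assuming a binary classifier with a 0.5 decision threshold and logistic loss; the name `select_misclassified` and the sample values are hypothetical, and the paper's exact selection rule may differ.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def select_misclassified(labels, raw_scores, threshold=0.5):
    """Indices of samples the current model classifies incorrectly."""
    kept = []
    for i, (y, score) in enumerate(zip(labels, raw_scores)):
        predicted = 1 if sigmoid(score) >= threshold else 0
        if predicted != y:
            kept.append(i)
    return kept

labels = [0, 0, 1, 1, 0]
raw_scores = [-2.0, 1.5, -0.3, 2.2, -1.0]  # current model outputs (log-odds)

kept = select_misclassified(labels, raw_scores)

# Only the gradient statistics of the kept samples are sent to the server.
sparse_stats = []
for i in kept:
    p = sigmoid(raw_scores[i])
    sparse_stats.append((p - labels[i], p * (1.0 - p)))
```

In this toy example only two of the five samples are transmitted, which shows how the filter reduces communication while focusing the update on the samples the model currently gets wrong.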

Experiment

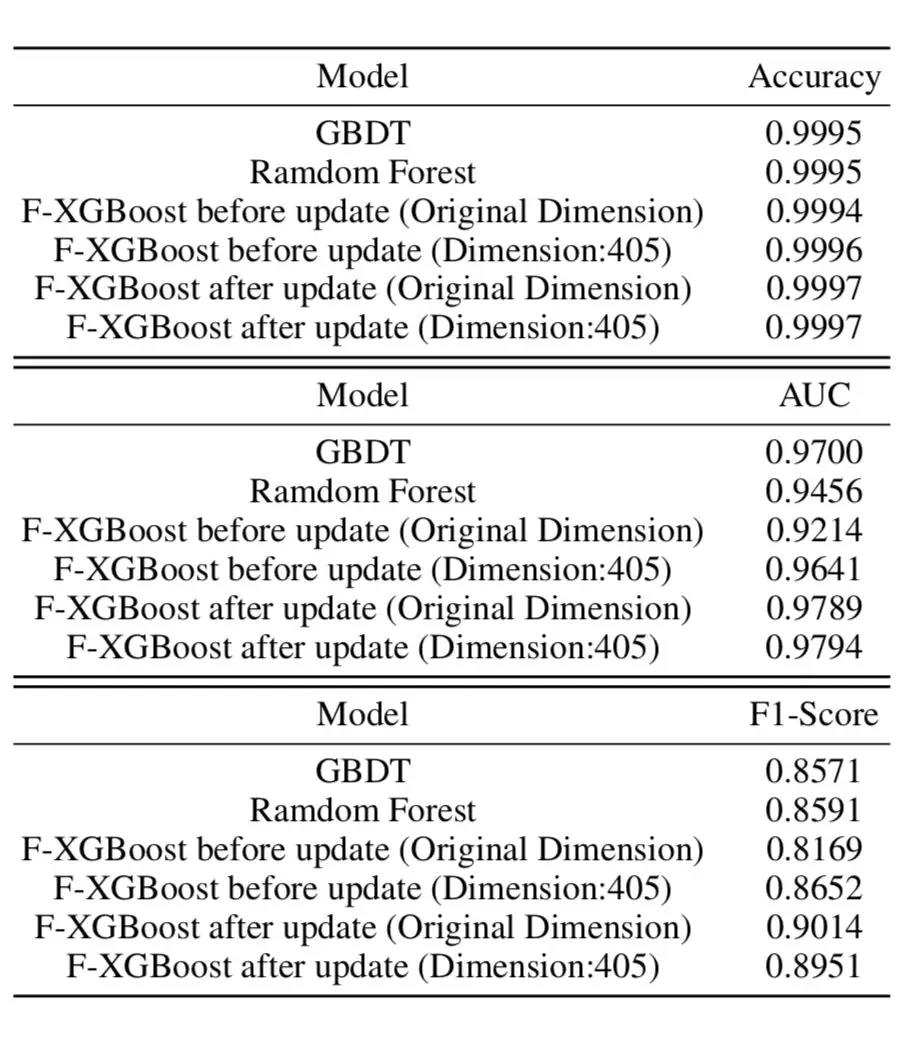

The paper tests the federated XGBoost algorithm on a credit card data set, where the task is to detect anomalous transactions. The data set contains 284,807 samples with 30 features, of which 492 are anomalous, only 0.172% of the total (this is the extremely imbalanced sample distribution mentioned above). In the experiment, the training results of plain federated XGBoost, GBDT, random forest, federated XGBoost with data aggregation, and federated XGBoost with sparse gradient update are computed and compared. The main conclusions are as follows.

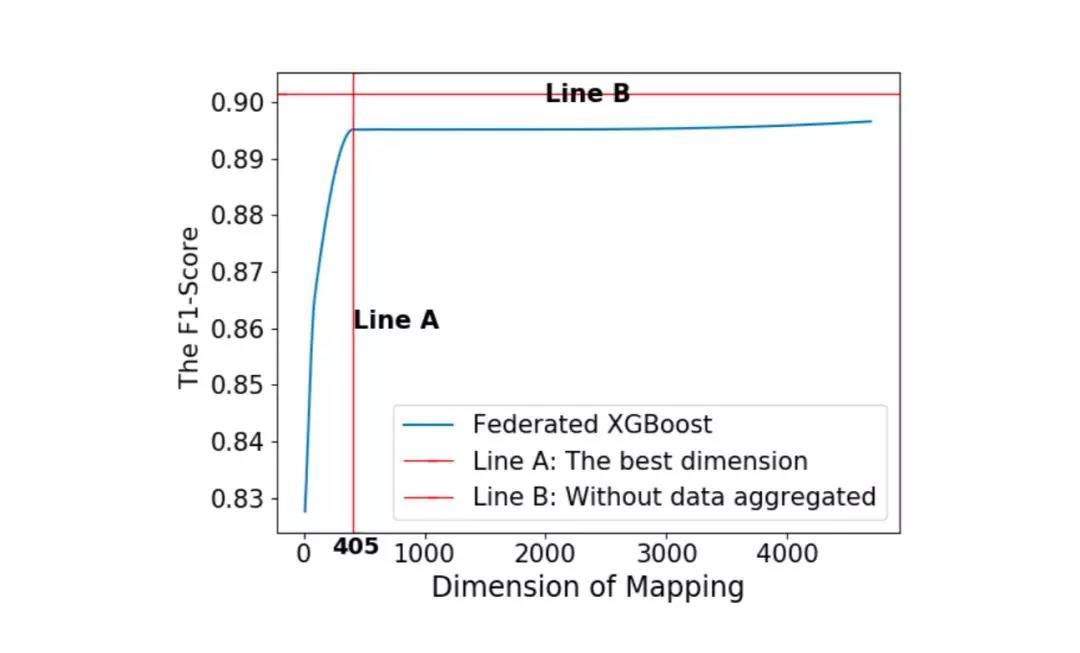

- (1) Model users need to balance privacy protection against training results. The optimal number of virtual data samples (clusters) is 405. The more raw samples each virtual data sample aggregates, that is, the fewer virtual data samples there are, the better data privacy is protected, but the worse the model trains. In the figure, the horizontal axis shows the number of virtual data samples and the vertical axis shows the F1-Score. As the number of virtual data samples increases, privacy protection weakens while the F1-Score rises. By weighing the two, the authors select 405, marked by straight line A, as the optimal number: at this point the F1-Score is already high and privacy protection is still strong. A value slightly smaller than 405 strengthens privacy protection but clearly degrades the training result; a value slightly larger than 405 weakens privacy protection and improves the training result only slightly. Line A marks the F1-Score of 0.895105 obtained with 405 clusters, and line B marks the F1-Score of 0.901408 obtained without data aggregation.

- (2) Once an appropriate aggregation scale is chosen, federated XGBoost with data aggregation and federated XGBoost with sparse gradient update both improve the training results significantly. Because the sample distribution is extremely imbalanced, accuracy is not a reasonable measure of training quality, so the paper compares the algorithms by AUC and F1-Score. On F1-Score, federated XGBoost with the sparse federated update (with or without data aggregation) is significantly better than random forest, GBDT, and plain federated XGBoost. On AUC, federated XGBoost with data aggregation and the sparse federated update likewise outperforms the other algorithms.
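A short calculation shows why accuracy is misleading on this data set and how small the F1 cost of aggregation is. It uses only the figures quoted above (284,807 samples, 492 anomalies, F1-Scores of 0.895105 and 0.901408); the "always predict normal" classifier is a hypothetical baseline added for illustration.

```python
def accuracy(tp, fp, fn, tn):
    return (tp + tn) / (tp + fp + fn + tn)

def f1_score(tp, fp, fn):
    denom = 2 * tp + fp + fn
    return 0.0 if denom == 0 else 2 * tp / denom

total, anomalies = 284_807, 492

# Hypothetical baseline that labels every transaction as normal:
# no true or false positives, every anomaly is a false negative.
tp, fp, fn, tn = 0, 0, anomalies, total - anomalies
trivial_accuracy = accuracy(tp, fp, fn, tn)
trivial_f1 = f1_score(tp, fp, fn)

# Relative F1 cost of aggregating into 405 virtual samples (line A vs line B).
f1_aggregated, f1_no_aggregation = 0.895105, 0.901408
relative_f1_cost = (f1_no_aggregation - f1_aggregated) / f1_no_aggregation
```

The baseline reaches an accuracy above 99.8% while detecting no anomalies at all, so its F1-Score is 0. By the same figures, aggregating into 405 virtual samples lowers the F1-Score by roughly 0.7% relative to training without aggregation.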

Innovation and future prospects

The main innovations of the paper are:

- XGBoost is applied to horizontal federated learning scenarios.

- The use of data aggregation methods enhances privacy protection.

- The use of sparse gradient update improves transmission efficiency and training effectiveness.

In the future, the authors may try more privacy protection methods and refine the experimental details to achieve better training results.